# Aleo


QuietHappinessEarnsHi: 0.03


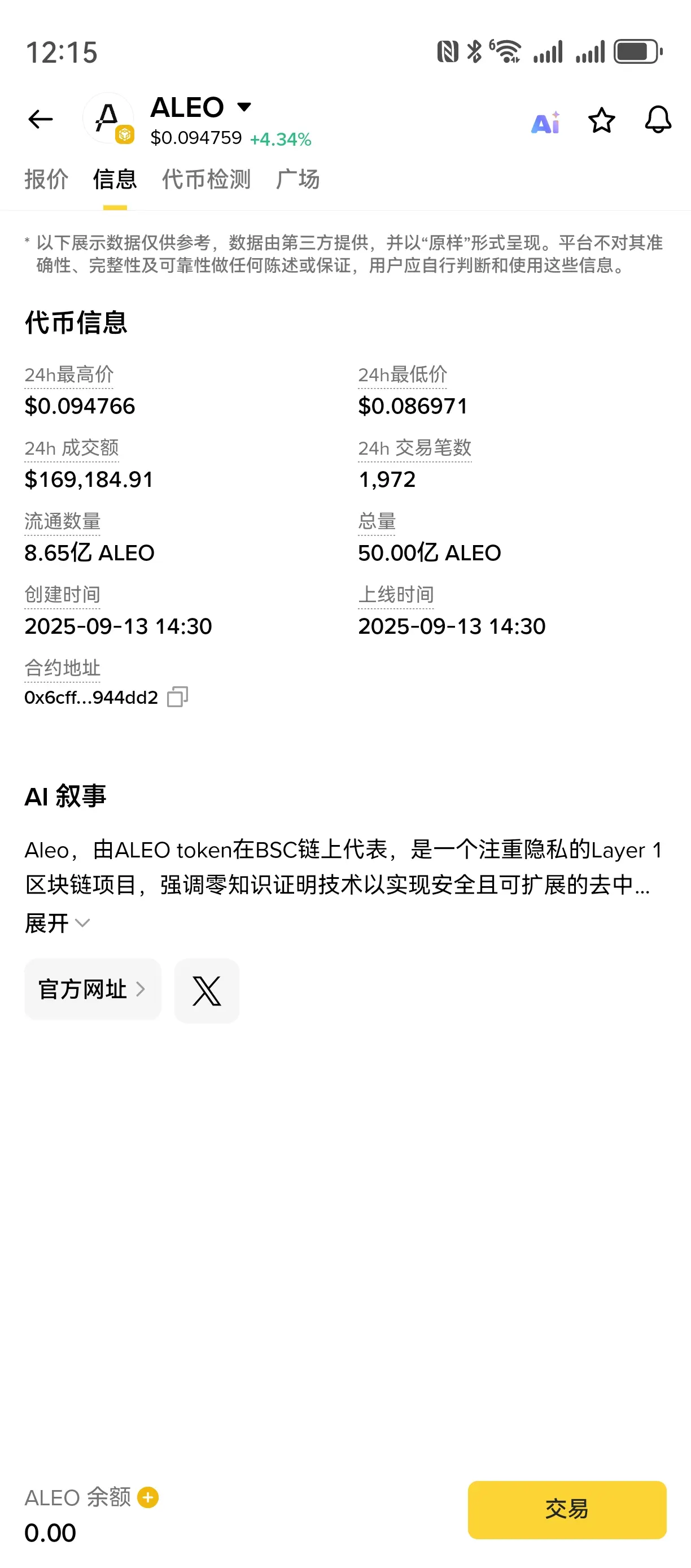

#ALEO After a year of correction, ALEO has still not bottomed out. Based on the cost of building ALEO, an estimated market value of around $8 million seems reasonable. Rebound momentum is weak, so the price is expected to bottom out in this cycle around October 2026 and only then start to recover, fluctuating between 0.01 and 0.015. After 2030, it is expected to reach 0.018 to 0.02.

ALEO -6.44%


ZeJun888: No pump, no bagholders.


HoldingVMyWeb3wra: Retrieve it now


bee2025: ae2 is all shut down…

